Demo lab: 2-Node Hyper-V Part 2

This is part two of demonstrating the process of rolling out a 2-node, hyperconverged Hyper-V cluster. This post assumes you’ve completed the prior post and have successfully rolled out the lab environment from part one. It also assumes you’ve got a functional Active Directory domain, which I’ve written up as a lab environment in this post. In this post we’re going to join our nodes to the domain, configure storage, and get a VM running on the cluster.

Add to domain

We’ll start out by adding the nodes to the domain. You can do this through sconfig, but if you’d rather do it via the command line, here’s the process:

# Use your own domain here

$domain = 'win.hrkrdr.com'

$password = 'DoMaiNAdmInPa$$w0rd!' | ConvertTo-SecureString -asPlainText -Force

$username = 'WIN\administrator'

# Craft a credential object here

$cred = New-Object System.Management.Automation.PSCredential($username,$password)

Add-Computer -DomainName $domain -Credential $cred -OUPath "ou=Servers,dc=win,dc=hrkrdr,dc=com" -Restart
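If you’d rather not leave the domain admin password in your shell history, one variation on the join above is to prompt for the credential instead. This is a minimal sketch that reuses the same domain and OU; the post-reboot check against Win32_ComputerSystem is my own addition, not part of the original procedure.

```powershell
# Prompt for the domain admin credential instead of hard-coding the password
$domain = 'win.hrkrdr.com'
$cred   = Get-Credential -UserName 'WIN\administrator' -Message "Credential for joining $domain"

Add-Computer -DomainName $domain -Credential $cred -OUPath 'ou=Servers,dc=win,dc=hrkrdr,dc=com' -Restart

# After the reboot, confirm the node reports domain membership
Get-CimInstance -ClassName Win32_ComputerSystem | Select-Object Name, Domain, PartOfDomain
```

PartOfDomain should read True and Domain should match your domain name on both nodes.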

Now that both nodes are joined to the domain and rebooted, let’s validate our configuration.

# Adjust the ReportName path to your needs.

Test-Cluster hyperv1,hyperv2 -Include 'Storage Spaces Direct',Inventory,Network,'System Configuration' -ReportName 'C:\Users\administrator\Documents\Initial_Report'

Once that completes, an HTML document called Initial_Report.htm will be created. Open it in a browser and resolve any issues it outlines before moving forward. The report is straightforward and should include descriptions and links for common issues. After making changes, run Test-Cluster again until you’re satisfied with the results.

Then it’s time to create the new cluster. Give it a unique computer name on the network and a static IP address on the Management VLAN. We also need to configure the quorum settings for the cluster. Since there are only two nodes, we need a witness to act as a tie breaker so the cluster stays operational if one node goes down. There are many ways of configuring a quorum witness, but the easiest in my opinion is a file share witness. Other options, such as a cloud witness, might be more attractive, especially if you’re extending Azure services into your datacenter. For our purposes I have created a share on one of the domain controllers that grants write access to the nodes’ computer objects in Active Directory.

New-Cluster -Name 'Hyperv' -Node hyperv1,hyperv2 -NoStorage -StaticAddress 172.16.30.7

# Add a quorum witness

Set-ClusterQuorum -NodeAndFileShareMajority '\\dc0\quorum' -Cluster 'hyperv'
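For reference, the witness share described above could be created on the domain controller roughly like this before running Set-ClusterQuorum. It’s a sketch under assumptions: the folder path C:\Shares\quorum is a placeholder of mine, and access is granted to the hyperv1$ and hyperv2$ computer accounts as described above. Depending on your environment, the cluster’s own computer account may need the same rights.

```powershell
# Run on the domain controller (dc0) before Set-ClusterQuorum.
# C:\Shares\quorum is a placeholder path; adjust it to your layout.
$path     = 'C:\Shares\quorum'
$accounts = 'WIN\hyperv1$', 'WIN\hyperv2$'

New-Item -Path $path -ItemType Directory -Force | Out-Null

# Share-level permissions for the node computer accounts
New-SmbShare -Name 'quorum' -Path $path -ChangeAccess $accounts

# Matching NTFS permissions (Modify, inherited by files and subfolders)
foreach ($account in $accounts) {
    icacls $path /grant "${account}:(OI)(CI)M"
}

# Back on a cluster node, after Set-ClusterQuorum: confirm the witness
Get-ClusterQuorum -Cluster 'hyperv'
```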

For clarity, let’s rename the networks in our new cluster to better reflect their SET and VLAN membership:

# Make sure you know which of these is which!

Get-ClusterNetwork

Name State Metric Role

---- ----- ------ ----

Cluster Network 1 Up 30384 Cluster

Cluster Network 2 Up 70384 ClusterAndClient

Cluster Network 3 Up 30385 Cluster

(Get-ClusterNetwork -Name "Cluster Network 3").Name="Storage-101"

(Get-ClusterNetwork -Name "Cluster Network 1").Name="Cluster-100"

(Get-ClusterNetwork -Name "Cluster Network 2").Name="Management-0"
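If it isn’t obvious which cluster network maps to which VLAN, listing the subnets before running the renames above can help. A small sketch; compare the addresses against the subnets you assigned in part one.

```powershell
# Show each cluster network with its subnet so it can be matched to a VLAN
Get-ClusterNetwork | Format-Table Name, Role, Metric, Address, AddressMask

# Show which adapter on each node belongs to which cluster network
Get-ClusterNetworkInterface | Format-Table Node, Network, Name, Address
```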

Now that we’ve got some basics out of the way, it’s time to dig into Storage Spaces Direct. If you’re using entirely new hardware (meaning your disks have never been used), you should see a list of devices with CanPool set to True when you run Get-PhysicalDisk:

Get-PhysicalDisk

Number FriendlyName SerialNumber MediaType CanPool OperationalStatus HealthStatus Usage Size

------ ------------ ------------ --------- ------- ----------------- ------------ ----- ----

2 SATA SSD JJ1233445566778899 SSD False OK Healthy Auto-Select 59.63 GB

2004 INTEL SSD PJJ123344556677889 SSD True OK Healthy Auto-Select 894.25 GB

1000 WDC SATA WDPJJ1233445566778 HDD True OK Healthy Auto-Select 2.73 TB

1000 WDC SATA WDPJJ1233445566778 HDD True OK Healthy Auto-Select 2.73 TB

1000 WDC SATA WDPJJ1233445566778 HDD True OK Healthy Auto-Select 2.73 TB

If not, you’ll need to clear your disks before proceeding. There are a variety of ways to do so, diskpart being the most tried and true. Once the disks you wish to use for Storage Spaces Direct are able to pool, let’s do some pre-work to ensure Storage Spaces Direct configures itself appropriately.
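As an alternative to diskpart, the clearing step can be sketched in PowerShell. This is a destructive operation, so treat the following as an illustration only: it skips boot and system disks and anything already RAW, and Clear-Disk is left in -WhatIf mode so nothing is wiped until you remove that switch.

```powershell
# Run on each node. Everything on the selected disks will be lost once -WhatIf is removed.
$candidates = Get-Disk | Where-Object {
    $null -ne $_.Number -and
    -not $_.IsBoot -and
    -not $_.IsSystem -and
    $_.PartitionStyle -ne 'RAW'
}

# Review this list carefully before going any further
$candidates | Format-Table Number, FriendlyName, SerialNumber, Size, PartitionStyle

foreach ($disk in $candidates) {
    # Bring the disk online and writable so it can be cleared
    $disk | Set-Disk -IsOffline:$false
    $disk | Set-Disk -IsReadOnly:$false

    # Remove -WhatIf to actually wipe the disk
    $disk | Clear-Disk -RemoveData -RemoveOEM -Confirm:$false -WhatIf
}
```

Afterwards, Get-PhysicalDisk should report CanPool as True for the cleared disks.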

We’re going to configure Fault Domain Awareness. I suggest reading through the documentation to fully understand what this configuration does. Performing these steps is recommended by Microsoft (even though it doesn’t really reflect our physical reality) to ensure the underlying mechanisms of Storage Spaces Direct work correctly.

# Top of the chain is our 'Site', which can be whatever you want

New-ClusterFaultDomain -Type Site -Name 'HomeLab'

# Next are racks, which our nodes will have to themselves

New-ClusterFaultDomain -Type Rack -Name 'Bench-Rack-1'

New-ClusterFaultDomain -Type Rack -Name 'Bench-Rack-2'

# Now some chassis definitions

New-ClusterFaultDomain -Type Chassis -Name 'Bench-Chassis-1'

New-ClusterFaultDomain -Type Chassis -Name 'Bench-Chassis-2'

# Let's associate all these disparate parts

Set-ClusterFaultDomain -Name 'Bench-Rack-1' -Parent 'HomeLab'

Set-ClusterFaultDomain -Name 'Bench-Rack-2' -Parent 'HomeLab'

Set-ClusterFaultDomain -Name 'Bench-Chassis-1' -Parent 'Bench-Rack-1'

Set-ClusterFaultDomain -Name 'Bench-Chassis-2' -Parent 'Bench-Rack-2'

Set-ClusterFaultDomain -Name 'hyperv1' -Parent 'Bench-Chassis-1'

Set-ClusterFaultDomain -Name 'hyperv2' -Parent 'Bench-Chassis-2'

# Verify

Get-ClusterFaultDomain

Name Type ParentName ChildrenNames Location

---- ---- ---------- ------------- --------

HomeLab Site

Bench1 Rack HomeLab

Bench2 Rack HomeLab

bench-chassis-1 Chassis Bench1 hyperv1

bench-chassis-2 Chassis Bench2 hyperv2

hyperv1 Node bench-chassis-2

hyperv2 Node bench-chassis-1

Now enable Storage Spaces Direct on the cluster and create the cluster shared volumes:

Enable-ClusterStorageSpacesDirect

# Cluster shared volume FriendlyName and Size should be set to meet your requirements

New-Volume -StoragePoolFriendlyName "S2D*" -FriendlyName CSV-1 -FileSystem CSVFS_ReFS -Size 3TB

New-Volume -StoragePoolFriendlyName "S2D*" -FriendlyName CSV-2 -FileSystem CSVFS_ReFS -Size 3TB

# Verify

Get-Volume

Get-Volume -FriendlyName csv*

DriveLetter FriendlyName FileSystemType DriveType HealthStatus OperationalStatus SizeRemaining Size

----------- ------------ -------------- --------- ------------ ----------------- ------------- ----

csv-2 CSVFS_ReFS Fixed Healthy OK 2.95 TB 3 TB

csv-1 CSVFS_ReFS Fixed Healthy OK 2.98 TB 3 TB

# Check out your new ClusterStorage

Get-ChildItem C:\ClusterStorage

Directory: C:\ClusterStorage

Mode LastWriteTime Length Name

---- ------------- ------ ----

d----l 6/11/2021 10:26 AM csv-1

d----l 6/11/2021 10:24 AM csv-2
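When picking the -Size values for New-Volume, it helps to know how much pool capacity you actually have. On a two-node cluster Storage Spaces Direct uses two-way mirroring, so each volume consumes roughly twice its size from the pool. A quick sketch for checking this:

```powershell
# Raw and allocated capacity of the S2D pool
Get-StoragePool -FriendlyName 'S2D*' |
    Select-Object FriendlyName, HealthStatus,
        @{ Name = 'SizeTB';      Expression = { [math]::Round($_.Size / 1TB, 2) } },
        @{ Name = 'AllocatedTB'; Expression = { [math]::Round($_.AllocatedSize / 1TB, 2) } }

# Resiliency setting and pool footprint of each volume's virtual disk
Get-VirtualDisk |
    Select-Object FriendlyName, ResiliencySettingName,
        @{ Name = 'SizeTB';      Expression = { [math]::Round($_.Size / 1TB, 2) } },
        @{ Name = 'FootprintTB'; Expression = { [math]::Round($_.FootprintOnPool / 1TB, 2) } }
```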

Now we’ll set up our Hyper-V server defaults:

# Make sure you're using the CSV attached to the right hosts!

Set-VMHost -ComputerName hyperv1 -VirtualHardDiskPath 'C:\ClusterStorage\csv-1\vhd'

Set-VMHost -ComputerName hyperv1 -VirtualMachinePath 'C:\ClusterStorage\csv-1'

Set-VMHost -ComputerName hyperv2 -VirtualHardDiskPath 'C:\ClusterStorage\csv-2\vhd'

Set-VMHost -ComputerName hyperv2 -VirtualMachinePath 'C:\ClusterStorage\csv-2'

# Enable Live-Migration

Enable-VMMigration -ComputerName hyperv1,hyperv2

$liveMigration = @{

MaximumVirtualMachineMigrations = 4

MaximumStorageMigrations = 4

VirtualMachineMigrationPerformanceOption = 'SMB'

ComputerName = 'hyperv1','hyperv2'

}

Set-VMHost @liveMigration
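To confirm that both hosts picked up the default paths and live migration settings, you can read them back. A small verification sketch:

```powershell
# Read back the defaults and migration settings from both hosts
Get-VMHost -ComputerName hyperv1, hyperv2 |
    Format-List ComputerName, VirtualHardDiskPath, VirtualMachinePath,
        VirtualMachineMigrationEnabled, VirtualMachineMigrationPerformanceOption,
        MaximumVirtualMachineMigrations, MaximumStorageMigrations
```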

We need to hop onto a domain controller and enable constrained delegation between the nodes to allow for migrations:

# First node: allow hyperv1 to delegate to hyperv2

$node = 'hyperv2'

$domain = 'win.hrkrdr.com'

Get-ADComputer hyperv1 |Set-ADObject -Add @{

"msDS-AllowedToDelegateTo" = "Microsoft Virtual System Migration Service/$node.$domain",

"cifs/$node.$domain","Microsoft Virtual System Migration Service/$node", "cifs/$node"

}

# Second node: allow hyperv2 to delegate to hyperv1

$node = 'hyperv1'

Get-ADComputer hyperv2 | Set-ADObject -Add @{

"msDS-AllowedToDelegateTo" = "Microsoft Virtual System Migration Service/$node.$domain",

"cifs/$node.$domain","Microsoft Virtual System Migration Service/$node", "cifs/$node"

}

# Finally, configure the nodes' migration authentication type

Set-VMHost -ComputerName hyperv1,hyperv2 -VirtualMachineMigrationAuthenticationType Kerberos
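Before moving on, it’s worth checking that the delegation entries landed on the right computer objects. A sketch for reading them back on the domain controller, assuming the ActiveDirectory module is available there; each node should list the migration service and cifs entries for the other node.

```powershell
Import-Module ActiveDirectory

foreach ($node in 'hyperv1', 'hyperv2') {
    Write-Host "Delegation targets for ${node}:"

    # Each node should list the SPNs of the *other* node
    Get-ADComputer -Identity $node -Properties 'msDS-AllowedToDelegateTo' |
        Select-Object -ExpandProperty 'msDS-AllowedToDelegateTo'
}

# Confirm both hosts are set to Kerberos authentication for migrations
Get-VMHost -ComputerName hyperv1, hyperv2 |
    Format-Table ComputerName, VirtualMachineMigrationAuthenticationType
```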

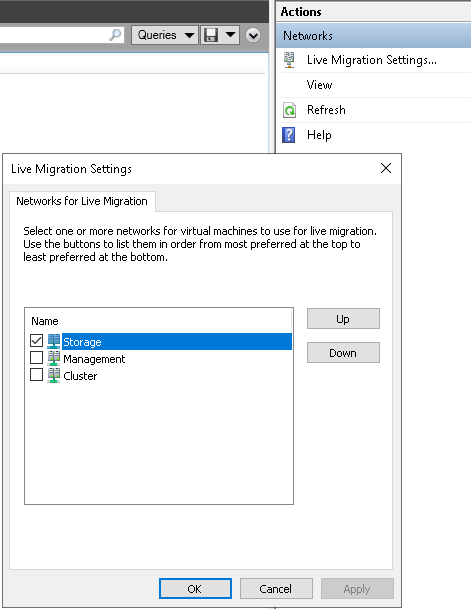

Now, after all that powershell, we’re ready to open up Failover Cluster Manager on our bastion host. First thing’s first, navigate to Networks and change Live Migration settings to limit it to the right networks:

Now’s a great time to try failing over nodes. To ensure that Storage Spaces Direct is doing its magic, you can use PowerShell:

Get-StorageSubSystem cluster* | Get-StorageJob
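A failover test along those lines might look like the following. It’s a minimal sketch: it drains hyperv1, checks where the roles ended up, brings the node back, and then waits for any storage jobs to finish. Repair jobs usually only show up after a node has actually been offline (for example, restarted), so an empty job list after a simple drain is normal.

```powershell
# Drain all roles off hyperv1 and pause the node
Suspend-ClusterNode -Name hyperv1 -Drain -Cluster hyperv

# Confirm the roles are now owned by hyperv2
Get-ClusterGroup -Cluster hyperv | Format-Table Name, OwnerNode, State

# Resume hyperv1 and move its roles back
Resume-ClusterNode -Name hyperv1 -Failback Immediate -Cluster hyperv

# Wait until no storage jobs are left unfinished
while (Get-StorageSubSystem cluster* | Get-StorageJob | Where-Object { $_.JobState -ne 'Completed' }) {
    Start-Sleep -Seconds 10
}

# Virtual disks should be back to OK / Healthy
Get-VirtualDisk | Format-Table FriendlyName, OperationalStatus, HealthStatus
```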

Now you can start spinning up other VMs and working in your highly available lab!